ChatGPT has been one of the most talked-about technologies since its release. It has redefined the AI experience for software developers and everyday users alike. The bot can do plenty of things, such as answering questions quickly and drafting text, but most importantly, it can write code. Because ChatGPT is used so widely around the globe, demand is high, and at busy times users may have to wait before they can use it. It also struggles with some kinds of code, such as smart contracts, where it can fail to produce meaningful output. Finally, its training data originally extended only to 2021, which limits its knowledge.

In the last couple of years, the field of natural language processing (NLP) has seen a series of major advances, among them the emergence of large language models such as GPT-3.5. Trained on huge text datasets, these neural networks produce fluent and sometimes strikingly human-like text. However, concerns about access to, and the ethics of, proprietary tools like GPT-3.5 have prompted a search for models whose code and weights are openly available. In this article, we explore 10 open-source alternatives to GPT-3.5, looking at their architecture, functionality, and possible uses.

List Of GPT-3.5 Open-Source Alternatives

1. BERT (Bidirectional Encoder Representations from Transformers)

Developed by Google, BERT has become one of the most prominent open-source language models. Unlike autoregressive models such as GPT-3.5, which read text left to right, BERT is bidirectional: it is pre-trained by hiding some words in a sentence and predicting them from the words both before and after them. This richer context makes it particularly well suited to tasks such as sentiment analysis, named entity recognition, and question answering. Because it is an encoder rather than a text generator, however, BERT is not a drop-in replacement for GPT-3.5's open-ended generation.
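
The difference between the two reading directions comes down to the attention mask. The pure-Python sketch below is illustrative only (the function names are hypothetical, not from any library): it builds a left-to-right mask of the kind GPT-style models use and the all-ones mask of a BERT-style encoder, then lists which words each one lets the model use when interpreting "bank".

```python
def causal_mask(n: int) -> list:
    """Left-to-right (GPT-style) mask: position i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list:
    """BERT-style mask: every position may attend to every other position."""
    return [[1] * n for _ in range(n)]


def visible_context(tokens: list, mask: list, i: int) -> list:
    """Tokens that position i can draw on as context (excluding itself)."""
    return [tok for j, tok in enumerate(tokens) if mask[i][j] and j != i]


tokens = ["the", "bank", "of", "the", "river"]
# Which words can help disambiguate "bank" (position 1)?
left_only = visible_context(tokens, causal_mask(len(tokens)), 1)
both_sides = visible_context(tokens, bidirectional_mask(len(tokens)), 1)
print(left_only)   # ['the']
print(both_sides)  # ['the', 'of', 'the', 'river']
```

Only the bidirectional mask exposes "river", the word that actually tells the model which sense of "bank" is meant.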

2. BLOOM (BigScience)

BLOOM, developed by the BigScience research workshop, is committed to a high standard of transparency: the project documents the data used to train the model, the model's known limitations, and the criteria used to evaluate its performance. Users can therefore see in detail what BLOOM can and cannot do, which makes it one of the most transparent large language models (LLMs) available. Like GPT-3.5, BLOOM is an autoregressive text generator, and its weights are freely available to download. It is also released in several sizes, so users can choose the one that suits their hardware and needs.

3. XLNet

XLNet, developed by researchers at Carnegie Mellon University and Google, introduced permutation language modeling. This approach overcomes a key limitation of left-to-right models like GPT-3.5, where each token can only be predicted from the tokens before it. XLNet instead trains on randomly sampled permutations of the order in which a sequence's tokens are predicted, so over the course of training each token learns to use context from both sides, without relying on BERT's artificial [MASK] tokens. At its release, this let XLNet outperform BERT on a wide range of NLP tasks. Because its code is open source, researchers can study and experiment with the model freely.
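
The core idea of permutation language modeling can be sketched in a few lines. This is a simplified illustration under stated assumptions (the helper name is hypothetical, and it omits XLNet's two-stream attention and partial prediction): for one factorization order, each target token is predicted from the tokens that come earlier in that order, which may lie to its right in the sentence.

```python
import random


def permutation_contexts(order):
    """For one factorization order (a permutation of positions), pair each target
    position with the positions it is predicted from: those that come earlier in
    the *order*, not necessarily earlier in the sentence."""
    return [(pos, sorted(order[:t])) for t, pos in enumerate(order)]


tokens = ["New", "York", "is", "a", "city"]

# One hand-picked order. The sentence itself is not reordered, only the
# sequence in which its tokens are predicted (XLNet enforces this with attention masks).
order = [2, 4, 0, 3, 1]
for target, ctx in permutation_contexts(order):
    print(f"predict {tokens[target]} from {[tokens[i] for i in ctx]}")

# During training, a fresh order is sampled for each sequence (seeded here for repeatability).
rng = random.Random(0)
sampled_order = rng.sample(range(len(tokens)), len(tokens))
print(permutation_contexts(sampled_order))
```

With the hand-picked order, "New" is predicted from "is" and "city", both of which come after it in the sentence, something a strictly left-to-right model never sees.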

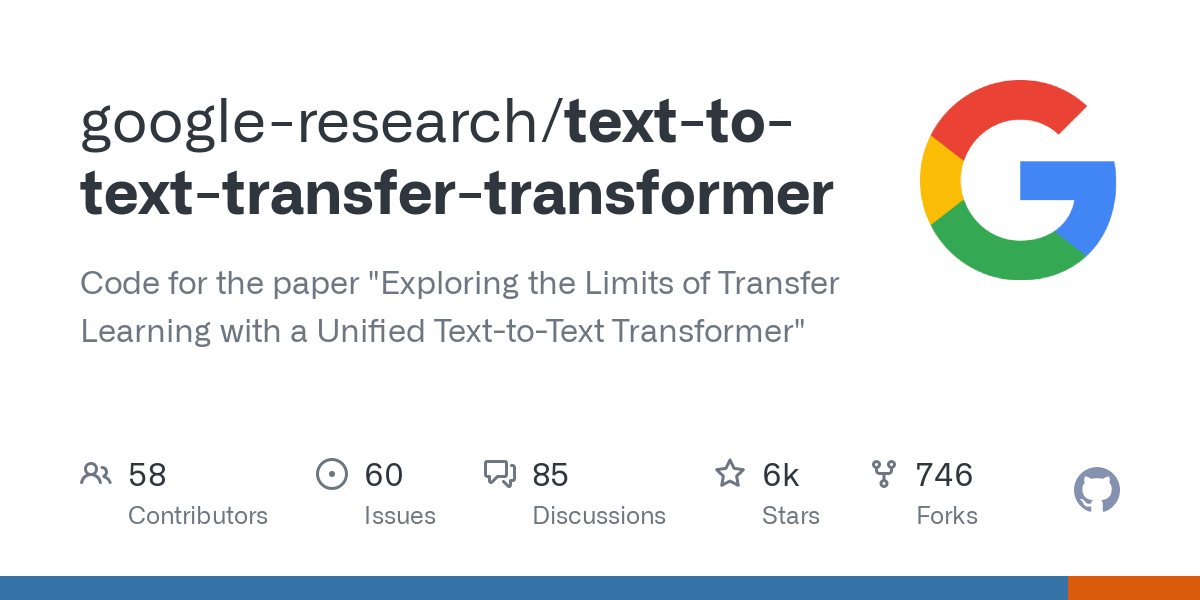

4. T5 (Text-to-Text Transfer Transformer)

T5, developed by Google, takes a unified approach to NLP: it casts every task as a text-to-text problem, in which the model receives text as input and produces text as output. This single framework allows the same training and deployment procedure to be used for tasks as different as translation, summarization, classification, and text generation. Because it is open source, T5 encourages collaboration among groups and individuals across the NLP community.
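
To see what "text-to-text" means in practice, the sketch below turns different tasks into plain (input, target) string pairs by prepending a task prefix. The prefixes are modeled on examples from the T5 paper; the dictionary keys and the `make_example` helper are hypothetical names for illustration, and no model is involved.

```python
# Task prefixes modeled on examples in the T5 paper; the keys are illustrative names.
TASK_PREFIXES = {
    "translation_en_de": "translate English to German: ",
    "acceptability": "cola sentence: ",
    "summarization": "summarize: ",
}


def make_example(task: str, source: str, target: str) -> tuple:
    """Cast any task as an (input text, target text) pair by prepending a task prefix.
    Even a classification label is just a short string the model learns to generate."""
    return TASK_PREFIXES[task] + source, target


batch = [
    make_example("translation_en_de", "That is good.", "Das ist gut."),
    make_example("acceptability", "The course is jumping well.", "not acceptable"),
]
for model_input, model_target in batch:
    print(f"{model_input!r} -> {model_target!r}")
```

Because every task shares this format, one model, one loss, and one decoding procedure can serve all of them.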

5. RoBERTa

RoBERTa (A Robustly Optimized BERT Pretraining Approach), developed by Facebook AI Research, keeps BERT's architecture but improves the pre-training recipe: it trains longer, on more data and with larger batches, drops BERT's next-sentence prediction objective, uses dynamic masking, and tunes the hyperparameters more carefully. These changes gave a significant performance boost, and at release RoBERTa matched or exceeded the state of the art on benchmarks such as GLUE, SQuAD, and RACE. Its open-source implementation lets researchers and practitioners build specialized versions of the model for different tasks and domains.
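
Dynamic masking is easy to illustrate. The sketch below assumes BERT's masking recipe (select about 15% of tokens as prediction targets; replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged) and contrasts one static mask reused every epoch with a fresh mask drawn each epoch. It is a simplification of the real data pipelines, and `mask_tokens` is a hypothetical helper, not a library function.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """BERT-style masking. Returns the corrupted sequence and a dict mapping each
    selected position to the original token the model must predict."""
    corrupted = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            targets[i] = tok
            r = rng.random()
            if r < 0.8:
                corrupted[i] = MASK               # 80%: replace with [MASK]
            elif r < 0.9:
                corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
            # remaining 10%: keep the original token
    return corrupted, targets


sentence = "the quick brown fox jumps over the lazy dog".split()
vocab = sorted(set(sentence))
rng = random.Random(42)

# Static masking: one mask computed up front and reused in every epoch.
static = mask_tokens(sentence, vocab, rng)
static_epochs = [static] * 3

# Dynamic masking (RoBERTa): a new mask is drawn each time the sequence is fed to the model.
dynamic_epochs = [mask_tokens(sentence, vocab, rng) for _ in range(3)]
for epoch, (corrupted, targets) in enumerate(dynamic_epochs):
    print(epoch, " ".join(corrupted), targets)
```

With dynamic masking, the model sees many different corruptions of the same sentence over a long training run instead of memorizing one.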

6. ALBERT (A Lite BERT)

ALBERT, from Google, targets one of the main obstacles to scaling up language models: their sheer size. It cuts the number of parameters with two techniques, factorized embedding parameterization and cross-layer parameter sharing, which reduces memory requirements compared with a BERT model of similar shape. Despite its compact design, ALBERT keeps pace with leading models on a variety of NLP tasks. Its open-source release, which makes it easy to train on new datasets and build specialized applications, has helped make it very popular.
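
The savings from ALBERT's two techniques can be worked out with simple arithmetic. The sketch below assumes an illustrative base-sized configuration (vocabulary V = 30,000, hidden size H = 768, embedding size E = 128, 12 layers) and a standard encoder layer with a 4H feed-forward block; the layer count is approximate (it ignores position embeddings and pooler weights), and the helper names are hypothetical.

```python
from typing import Optional


def embedding_params(vocab_size: int, hidden: int, embed: Optional[int] = None) -> int:
    """Token-embedding parameters. BERT-style: one V x H matrix.
    ALBERT-style (factorized): a V x E matrix followed by an E x H projection."""
    if embed is None:
        return vocab_size * hidden
    return vocab_size * embed + embed * hidden


def transformer_layer_params(h: int) -> int:
    """Approximate weights in one encoder layer with hidden size h and a 4h feed-forward
    block: Q/K/V/output projections, the two feed-forward matrices, and two LayerNorms."""
    attention = 4 * (h * h + h)
    feed_forward = (h * 4 * h + 4 * h) + (4 * h * h + h)
    layer_norms = 2 * (2 * h)
    return attention + feed_forward + layer_norms


def encoder_params(per_layer: int, num_layers: int, shared: bool) -> int:
    """Cross-layer sharing: all layers reuse a single set of weights."""
    return per_layer if shared else per_layer * num_layers


V, H, E, LAYERS = 30_000, 768, 128, 12  # illustrative, base-sized configuration

print(f"{embedding_params(V, H):,}")     # 23,040,000
print(f"{embedding_params(V, H, E):,}")  # 3,938,304
layer = transformer_layer_params(H)
print(f"{encoder_params(layer, LAYERS, shared=False):,}")  # 85,054,464
print(f"{encoder_params(layer, LAYERS, shared=True):,}")   # 7,087,872
```

Sharing cuts the stored encoder parameters twelvefold here, but the shared layer still runs 12 times per input, so the computation per token stays roughly the same as in an unshared model of the same shape.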

7. Electra

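Electra takes a different approach to pre-training. Instead of simply masking tokens, it uses a small generator network to replace some tokens in the input with plausible alternatives, and trains the main model, a discriminator, to tell which tokens are original and which have been replaced (replaced token detection). Because the discriminator learns from every token in the input rather than only the masked ones, this procedure produces more compute-efficient language models. The setup resembles adversarial training, although the generator is trained with ordinary maximum likelihood rather than to fool the discriminator. Electra's open-source implementation gives other researchers more opportunities to explore this technique.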
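
Replaced token detection boils down to per-token binary labels. In this minimal sketch, the generator's output is hard-coded and hypothetical (a real Electra generator is a small masked language model), and the helper names are illustrative: it builds the labels the discriminator is trained on and scores two made-up sets of discriminator predictions with binary cross-entropy.

```python
import math


def corrupt(tokens, positions, generator_samples):
    """Write the generator's sampled tokens into the chosen positions."""
    corrupted = list(tokens)
    for pos, sample in zip(positions, generator_samples):
        corrupted[pos] = sample
    return corrupted


def rtd_labels(original, corrupted):
    """Replaced-token-detection targets: 1 where the token was replaced, 0 otherwise.
    A sample that happens to equal the original token counts as original (0)."""
    return [int(o != c) for o, c in zip(original, corrupted)]


def rtd_loss(prob_replaced, labels):
    """Mean binary cross-entropy over *every* position, not just the masked ones."""
    eps = 1e-12  # guard against log(0)
    return -sum(
        y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
        for p, y in zip(prob_replaced, labels)
    ) / len(labels)


original = "the chef cooked the meal".split()
# Hypothetical generator output for masked positions 1 and 2:
# it restores "chef" correctly but replaces "cooked" with "ate".
corrupted = corrupt(original, positions=[1, 2], generator_samples=["chef", "ate"])
labels = rtd_labels(original, corrupted)
print(corrupted)  # ['the', 'chef', 'ate', 'the', 'meal']
print(labels)     # [0, 0, 1, 0, 0]

# A confident, correct discriminator scores a much lower loss than a coin-flipping one.
good = rtd_loss([0.01, 0.01, 0.99, 0.01, 0.01], labels)
unsure = rtd_loss([0.5] * 5, labels)
```

Note that "chef" gets label 0 even though it was masked: the discriminator is asked whether a token differs from the original, not whether it was touched.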

8. DistilBERT

DistilBERT, from Hugging Face, is a distilled version of BERT: a smaller "student" model trained to imitate the full "teacher" model. It is about 40% smaller and 60% faster than BERT while retaining about 97% of its language-understanding performance. This lightweight alternative can be deployed even in resource-constrained environments without a large loss of accuracy, and its open-source distribution makes it easy to adapt to the specific demands of different applications.
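
The heart of knowledge distillation is training the student on the teacher's softened probabilities. DistilBERT's full objective combines several losses (including the usual masked-language-modeling loss and a cosine loss on hidden states); the sketch below shows only the core soft-target term, using made-up logits and hypothetical helper names.

```python
import math


def softmax(logits, temperature=1.0):
    """Probabilities from logits; a higher temperature gives a flatter (softer) distribution."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy of the student's softened predictions against the teacher's
    softened predictions (the "soft targets")."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(p_teacher, p_student))


# Made-up logits over three candidate words for one masked position.
teacher = [4.0, 2.5, 0.5]
close_student = [3.8, 2.4, 0.6]  # ranks the words like the teacher does
far_student = [0.5, 2.5, 4.0]    # ranks them the opposite way

print(distillation_loss(close_student, teacher) < distillation_loss(far_student, teacher))  # True
print(max(softmax(teacher, 4.0)) < max(softmax(teacher, 1.0)))  # True: temperature softens
```

The soft targets carry more information than a single correct answer: they also tell the student how plausible the teacher found the second- and third-best words.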

9. BART (Bidirectional and Auto-Regressive Transformers)

BART, from Facebook AI, is a sequence-to-sequence model for text tasks such as summarization, translation, and text generation. It is pre-trained as a denoising autoencoder: the input text is corrupted (for example, by masking spans or shuffling sentences), and the model learns to reconstruct the original. By combining a bidirectional encoder, like BERT's, with an autoregressive decoder, like GPT's, BART achieves strong results across a variety of domains. Its open-source implementation encourages collaboration and innovation in sequence modeling.
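
BART's pre-training is easy to picture as input/output pairs. The sketch below implements two of the noising functions described in the BART paper: text infilling, where a span of tokens is replaced by a single mask token (the paper draws span lengths from a Poisson distribution with λ = 3, whereas the span here is fixed by hand), and sentence permutation. The function names are hypothetical; in every case the training target is the original, uncorrupted text.

```python
import random

MASK = "<mask>"


def text_infilling(tokens, start, length):
    """Replace tokens[start:start + length] with ONE mask token, so the model must also
    infer how many tokens are missing. length=0 inserts a mask without removing anything."""
    return tokens[:start] + [MASK] + tokens[start + length:]


def sentence_permutation(sentences, rng):
    """Shuffle the sentence order; the decoder has to restore the original order."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled


original = "BART is trained to reconstruct the original text".split()
noisy = text_infilling(original, start=3, length=3)
print(" ".join(noisy))  # BART is trained <mask> original text

# A training pair: the encoder reads the noisy text, the decoder generates the original.
training_pair = (" ".join(noisy), " ".join(original))

sentences = ["BART has an encoder.", "It also has a decoder.", "Both are Transformers."]
print(sentence_permutation(sentences, random.Random(1)))
```

Because the decoder must regenerate the full text, the same pre-trained model transfers naturally to generation tasks such as summarization.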

10. Fairseq

Fairseq, an open-source toolkit developed by Facebook AI Research, provides implementations of many sequence-to-sequence models, including the Transformer. Rather than a single model, it is a flexible and extensible framework for NLP tasks and research projects; RoBERTa and BART, for example, were originally released through it. Its open-source character invites community participation and drives innovation.

What Are The Benefits Of Using GPT-3.5 Open-Source Alternatives?

Using GPT-3.5 open-source alternatives offers several benefits:

- Customization: Users can fine-tune the models on their own data for their specific needs, which makes it possible to build solutions for a wide variety of applications.

- Transparency: Open-source models give users insight into their architecture and, in many cases, the data they were trained on, which helps build trust and understanding.

- Cost-effectiveness: Community-driven open-source models can be used without paying the usage or licensing fees that proprietary services such as GPT-3.5 charge, although running them still requires your own computing resources.

- Community Collaboration: Open-source projects benefit from community contributions such as improvements, bug fixes, and new features.

- Ethical Considerations: Because open-source models can be run on your own infrastructure, they reduce data-privacy risks and give users more control over their data and over how the model is used.

Wrap Up

Overall, the open-source alternatives to GPT-3.5 offer a broad range of possibilities, each with different strengths. From bidirectional encoding in BERT to permutation language modeling in XLNet and replaced token detection in Electra, these models are valuable resources for advancing both NLP research and applications. Through collaboration and creativity, open-source projects help democratize AI technologies, enabling researchers and practitioners around the globe to apply them to problems in their own contexts.

FAQs

Is there an open-source version of GPT-3?

No, there is no direct open-source equivalent of the GPT-3 language model. However, several open-source models can handle many of the same tasks. For example, GPT-2, BERT, XLNet, and other language models offer overlapping capabilities and can be fine-tuned for a wide range of natural language processing tasks.

Are there open-source alternatives to ChatGPT?

Yes. Several open-source conversational AI frameworks, including Rasa, DeepPavlov, and ChatterBot, can replicate parts of ChatGPT's functionality or be customized for more specific applications.

Is BLOOM an open-source alternative to GPT-3?

Yes. BigScience's BLOOM is an open-source alternative to GPT-3, and its weights are freely available for research and commercial projects under the BigScience RAIL license. BLOOM, a 176-billion-parameter model, was trained for 117 days on the Jean Zay supercomputer of the French National Centre for Scientific Research (CNRS).

Is ChatGPT 3.5 open source?

No. GPT-3.5, the model behind ChatGPT, is proprietary: its source code and model weights are not publicly available and cannot be modified without OpenAI's permission.