Remember the days when writing was confined by the limits of human imagination and time? The advent of GPT-3 has changed the way we interact with words and AI. The days of repetitive, lackluster text are fading: GPT-3 has transformed our approach to text generation, increasing both creativity and efficiency.

Since 2014, competition in the artificial intelligence sphere has sharpened, with each player claiming to offer natural language processing capabilities similar to those of OpenAI's GPT-3, which was released in 2020. Ever since then, many AI companies have launched competing efforts. Although GPT-3 is quite powerful, its high cost has pushed developers to build similarly capable but cheaper large language models, also known as LLMs.

GPT-3 can write content for almost anything, from blog posts to whitepapers and many other kinds of text. The trend is gaining traction, and a host of other platforms are joining the competition. Apart from ChatGPT, several open-source GPT-3 alternatives have recently launched to meet this demand. In this blog, you will learn how this AI technology works and which open-source GPT-3 alternatives are available on the market.

What Is GPT-3?

On June 11, 2020, San Francisco-based OpenAI released its large language model GPT-3. The term GPT stands for Generative Pre-trained Transformer, and GPT-3 is the follow-up to GPT-2. It is a neural network machine learning (ML) model trained on about 570 GB of internet text, including public, non-curated data from Common Crawl, Wikipedia, and other texts selected by OpenAI. Thanks to this data, GPT-3 can produce human-like responses from text inputs of almost any size. It can answer questions, write essays, write code in almost any programming language, summarize long texts, and even translate between languages.

At its core, GPT-3 is a predictive language model: it processes a text input and works out which text is most likely to come next. To a large extent, the quality and quantity of the data used to train such ML models dictate how accurate their predictions will be; a good, large data set yields more accurate predictions. Since releasing GPT-3, OpenAI has continued to improve and update the model so that it produces less toxic answers, reducing harmful and disingenuous language. GPT-3 is also the engine behind other OpenAI applications.
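The idea of predicting the most likely next text can be illustrated with a toy example. The sketch below is not how GPT-3 works internally (GPT-3 is a Transformer neural network); it only counts which word follows which in a tiny made-up corpus. The function names `train_bigram_model` and `predict_next` and the sample corpus are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; a real model is trained on hundreds of gigabytes of text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice)
```

Even in this tiny case, more training text changes the counts and therefore the prediction, which is the same reason data quality and quantity matter for large models.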

Top 5 GPT-3 Open Source Alternatives

There is no direct open-source counterpart to GPT-3 that matches both its scale and its capabilities. However, here are five notable language models and frameworks that offer powerful natural language processing capabilities:

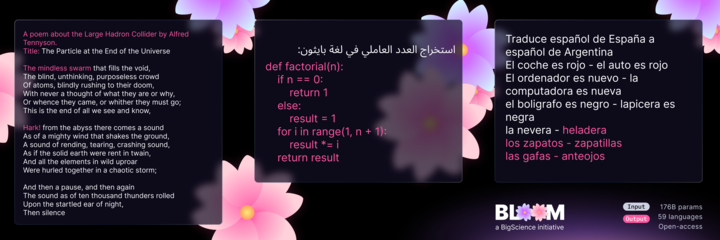

BLOOM (BigScience)

BLOOM is arguably the strongest open-source alternative to ChatGPT right now when it comes to generating quality content with AI. Created by BigScience, it has about 176 billion parameters, slightly more than the roughly 175 billion parameters of GPT-3. It was trained at the supercomputing center of the French National Center for Scientific Research. It supports more than 40 languages: English is the primary one, but other languages can be used as your requirements dictate.

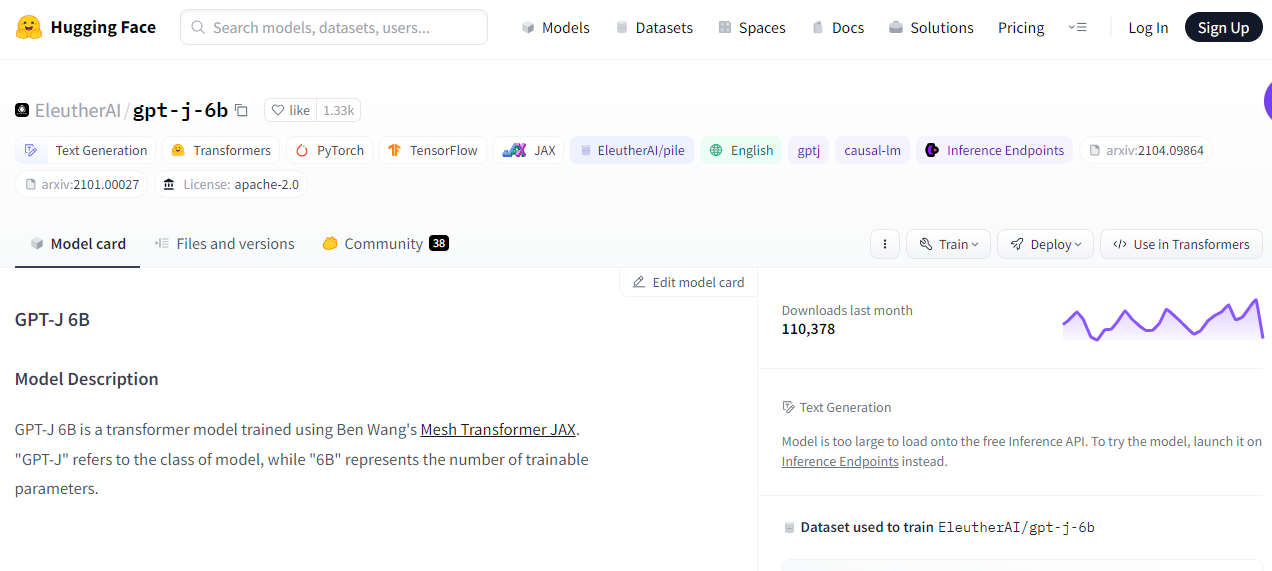

GPT-J And GPT-Neo (EleutherAI)

GPT-J is a small, open-source, 6-billion-parameter autoregressive model for text generation that can be used free of charge. It was trained on The Pile, a collection of 22 datasets containing more than 800 GB of English text. Despite its size, the model performs on par with the 6.7-billion-parameter version of GPT-3, and it surpasses its predecessor, GPT-Neo. GPT-Neo came in two main versions, with 1.3 billion and 2.7 billion parameters, and was later followed by GPT-NeoX.

Megatron-Turing NLG (NVIDIA and Microsoft)

Megatron-Turing Natural Language Generation (NLG) was announced as the largest and most powerful monolithic LLM, with 530 billion parameters. NVIDIA and Microsoft trained it on The Pile, an 800 GB dataset made up of around 22 high-quality smaller datasets, using a custom supercomputer built on the DGX SuperPOD architecture and described by some as one of the fastest computers in existence. Currently, early access to Megatron is invitation-only and limited to organizations whose research objectives are approved by NVIDIA and Microsoft.

BERT (Google)

BERT stands for Bidirectional Encoder Representations from Transformers. It is a masked language model created by Google, not an autoregressive one like GPT-3. It is one of the oldest Transformer-based language models: it was open-sourced in 2018 and trained on text that includes Wikipedia. Google still uses it as a benchmark to better understand search intent and improve its natural language capabilities. BERT is a bidirectional, unsupervised language model: it considers the context both before and after a given position in the text in order to make appropriate predictions.
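To make the bidirectional idea concrete, here is a minimal sketch of how a masked-language-modeling training example can be built: some tokens are hidden, and the model must recover them from the visible tokens on both sides. This is a simplification under stated assumptions, not BERT's actual preprocessing: real BERT works on subword tokens and sometimes swaps in a random or unchanged token instead of the mask. The helper names `make_masked_example` and `context_for` are hypothetical.

```python
import random

MASK = "[MASK]"


def make_masked_example(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens; return the masked input and the hidden targets."""
    rng = random.Random(seed)
    masked = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = MASK
            targets[i] = tok
    if not targets:  # guarantee at least one masked position in short inputs
        i = rng.randrange(len(tokens))
        masked[i] = MASK
        targets[i] = tokens[i]
    return masked, targets


def context_for(masked, position):
    """Return the visible tokens to the left and to the right of a position."""
    return masked[:position], masked[position + 1:]


# Simplified whitespace tokenization, for illustration only.
tokens = "BERT reads the words on both sides of a gap".split()
masked, targets = make_masked_example(tokens)
print(" ".join(masked))
for position, original in targets.items():
    left, right = context_for(masked, position)
    print(f"predict {original!r} using left={left} and right={right}")
```

An autoregressive model such as GPT-3 would only see the `left` part when predicting a token; a bidirectional model can use both sides.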

LaMDA (Google)

LaMDA is a decoder-only, autoregressive Language Model for Dialog Applications. Besides chatting about a wide range of topics, the model can also produce lists and can be taught to discuss specific domains. Dialog models scale well with size and can handle long-range dependencies, which means they can take the earlier conversation into account and not only the current input. They also support domain grounding.
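The point about using earlier conversation as context can be sketched in a few lines. The example below is an assumption-laden illustration, not LaMDA's real input format: it simply joins the most recent turns of a conversation into one text input, so the model sees earlier turns alongside the new message. The function `build_dialog_input`, the `speaker: text` layout, and the `max_turns` limit are all hypothetical.

```python
def build_dialog_input(history, user_message, max_turns=4):
    """Join the latest turns (including the new message) into a single model input."""
    turns = history + [("user", user_message)]
    recent = turns[-max_turns:]  # keep only the most recent turns
    return "\n".join(f"{speaker}: {text}" for speaker, text in recent)


# Hypothetical conversation used only to show the shape of the input.
history = [
    ("user", "Tell me about Mars."),
    ("bot", "Mars is the fourth planet from the Sun."),
]
print(build_dialog_input(history, "How long is a day there?"))
```

Without the earlier turns, the word "there" in the last message would have nothing to refer to, which is why dialog models need the conversation history.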

Things To Consider Before Using A GPT-3 Open Source Alternative

Before selecting an alternative to GPT-3, there are several aspects to weigh so that the model you choose addresses your individual needs and requirements. Here are the key aspects to consider:

- Task Suitability: Language models differ in how well they handle different natural language tasks. First, decide which tasks you need the model for: text completion, summarization, translation, question answering, or sentiment analysis. Then select an option that performs well on the tasks your application depends on.

- Model Architecture: GPT-3 is based on the Transformer architecture, and other models introduce variations and improvements on it. Assess the architecture of each alternative to understand its strengths, its weaknesses, and its suitability for your use case. For instance, BERT uses bidirectional context, while T5 frames every task as a text-to-text problem.

- Model Size and Resource Requirements: The size of a language model determines its computational needs. Smaller models may be better suited to applications with limited resources or a need for faster inference. Weigh model size against performance for your specific use case.

- Open-Source Availability: Verify that the alternative is actually open source. Open-source models are more transparent, can be customized, and encourage community engagement. Models such as GPT-2 and BERT have open-source implementations.

- Training Data and Pre-training Approach: The quality and variety of the training data affect the performance of a language model. Find out where the pre-training data came from and what kind of data it is. Also note the pre-training objective: models such as BERT use masked language modeling, while models like GPT use autoregressive language modeling.

- Integration and API Availability: Evaluate how feasible it is to integrate the alternative model into your application or workflow. Some models provide APIs for easy integration, like the GPT-3 API offered by OpenAI.

- Performance Benchmarks: Review performance benchmarks on standard datasets related to your tasks. Compare the alternative against other models and evaluate how it does in terms of accuracy, speed, and efficiency, on both the hardware and the software side.
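To put the model-size consideration into numbers, the sketch below gives a rough back-of-the-envelope estimate of the memory needed just to hold each model's weights. It assumes 2 bytes per parameter (16-bit precision) and ignores activations, caches, and framework overhead, so real requirements are higher. The function name `weight_memory_gb` is hypothetical; the parameter counts are the approximate figures quoted in this article.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Rough memory (in GB) to store the weights alone; 2 bytes = 16-bit precision."""
    return num_parameters * bytes_per_parameter / 1e9


# Approximate parameter counts as quoted in this article.
models = {
    "GPT-J": 6e9,
    "GPT-3": 175e9,
    "BLOOM": 176e9,
    "Megatron-Turing NLG": 530e9,
}

for name, params in models.items():
    print(f"{name}: about {weight_memory_gb(params):.0f} GB of weights at 16-bit precision")
```

By this estimate GPT-J needs about 12 GB for its weights, while BLOOM needs about 352 GB, which is why smaller models are so much easier to run on limited hardware.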

Final Words

We have now covered a few of the top open-source GPT-3 alternatives on the market, which brings this blog post to a close. These platforms are getting a lot of attention these days, because creating content with the help of powerful AI software is such a cool concept. Although ChatGPT is becoming more and more popular, there are several open-source GPT-3 substitutes you should be aware of. We've explained a few of them in this article so you can see how market competition is increasing and how quickly new platforms are arriving.

FAQs

Many open-source alternatives to GPT-3 exist. Organizations such as OpenAI and Google have released open-source language models and frameworks that provide similar functionality. Examples include GPT-2, released by OpenAI, and models like BERT and T5.

BLOOM is often considered the best alternative to GPT-3. It has emerged as a powerful large language model that meets the increasing demands of natural language processing and generation, and it stands out among GPT-3 alternatives for its ability to handle a wide range of natural language tasks.

Generally, access to GPT-3 is not free of charge: OpenAI provides GPT-3 as a paid service, so using the model involves certain costs. GPT-3 can be embedded in applications and services via OpenAI's API (Application Programming Interface), but accessing the service requires a fee, and OpenAI publishes the pricing details. OpenAI does periodically announce research previews and promotions through which users may get limited access to GPT-3 or reduced pricing, but these offers are generally temporary and come with specific terms and conditions set by OpenAI.

GPT-4 is the latest generative AI model created by OpenAI, and it is transforming the way people work. GPT-4 is not open source, so we can't see the code, model architecture, training data, or weights needed to reproduce its results.